TL;DR: Large language models only see the world in one dimension, so stop using them to think.

What does a summer's day feel like?

Considering this question likely brings to mind a series of sensations, emotions and memories, based on previous summer days you've experienced.

Encoded primarily in the hippocampus, these prior experiences are consolidated over time, often during sleep, and then distributed across multiple brain regions. They might be influenced by books you've read or dreams you've had; fact and fiction seamlessly blended together.

It's hard to express these feelings in words. The greatest poets, writers and artists have struggled with it. One of the best attempts I've seen is by Emily Dickinson:

A sepal, petal, and a thorn

Upon a common summer’s morn—

A flask of Dew—A Bee or two—

A Breeze—a caper in the trees—

And I’m a Rose!

But even this falls short. The experience of a summer's day is so much more than the sum of its parts.

One-dimensional summers

What does a large language model (LLM) think a summer's day feels like?

A summer's day feels like golden warmth on your skin, the scent of sun-kissed grass, and a lazy breeze whispering through the trees. The air hums with cicadas, laughter, and distant waves.

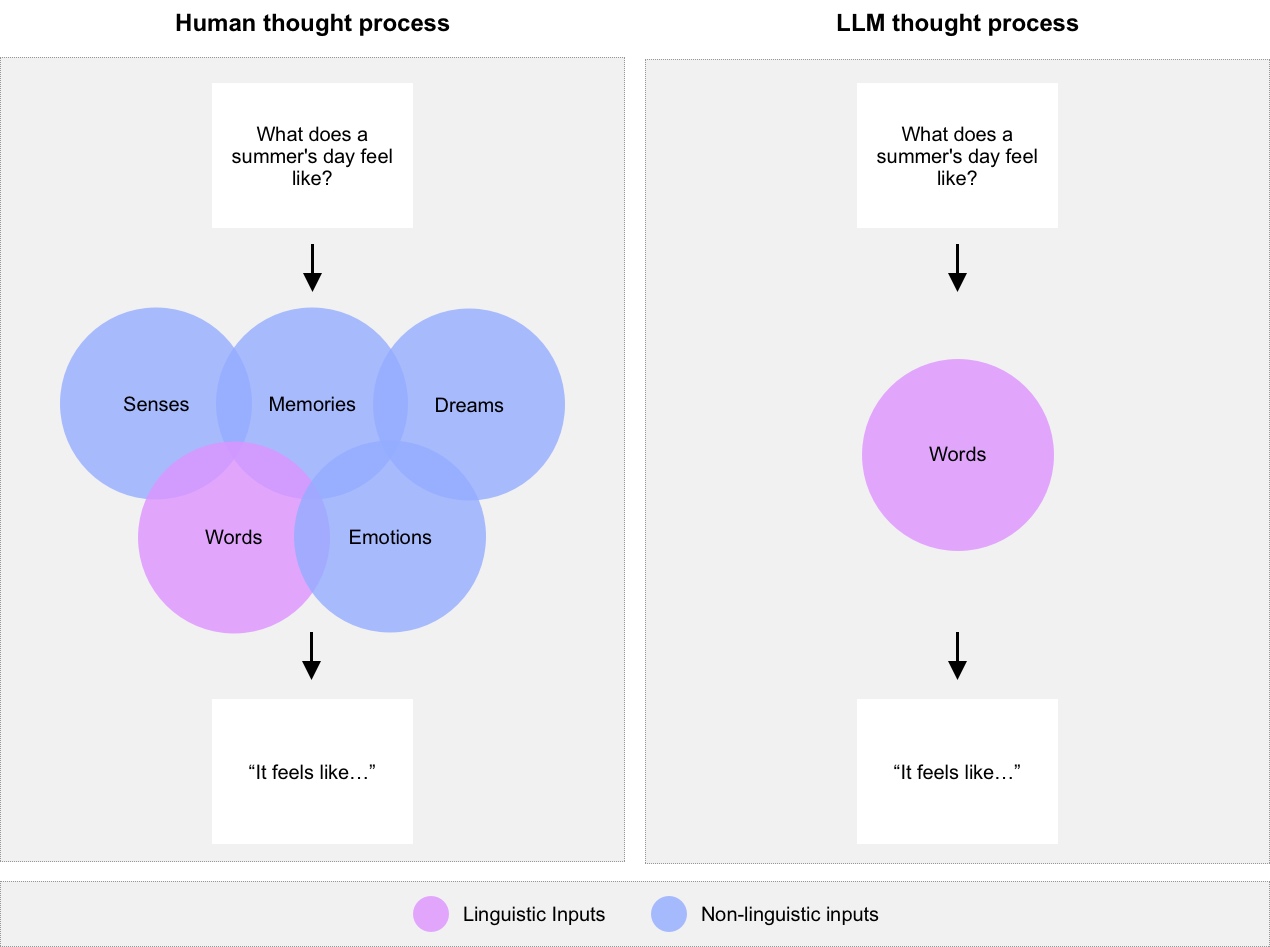

This is a linguistically accurate description, but the process that produced it is fundamentally different from Dickinson's. Rather than processing a complex web of sensory inputs, emotions and memories, the LLM draws on patterns in the text it was trained on to generate a plausible description. It's a simulation of the experience, a kind of hallucination, rather than a synthesis of real-world experience.

A beautiful sunset, the sound and smell of rain, the relentlessness of grief: we feel these things, but could we describe them in words? We can try, but words would capture only a tiny fraction of the whole. An LLM has access only to this minute part of the human experience. This becomes clear when you ask an LLM what a summer's day looks like:

The limits of language, and why it matters

Humans have evolved over millennia to process multiple data streams at once to make sense of the world. Yet we increasingly rely on inputs from LLMs that are, by definition, one-dimensional. Why is this important? Because the way we get to language matters.

Even LLM companies themselves realise this. On its job postings, Anthropic requires applicants to agree to its AI Policy for Application, which states:

While we encourage people to use AI systems during their role to help them work faster and more effectively, please do not use AI assistants during the application process. We want to understand your personal interest in Anthropic without mediation through an AI system, and we also want to evaluate your non-AI-assisted communication skills. Please indicate 'Yes' if you have read and agree.

The key word in the above statement is "mediation". LLMs mediate our understanding of the world. They are a filter, a lens, a distortion. It's not a technical limitation that prevents LLMs from thinking like us, but a philosophical one. They can't experience the world like us, and therefore cannot think like us.

For decades the field of AI has been obsessed with the Turing test: can a machine hold a text conversation that is indistinguishable from a human's? But this is the wrong question. The real question is: can a machine think like a human?

The importance of the thinking process, not just the output, is often overlooked in the rush to deploy LLMs. But the distinction is crucial, both ethically and legally. The European Union's Artificial Intelligence Act addresses transparency in AI systems specifically, stating that it means "that AI systems are developed and used in a way that allows appropriate traceability and explainability".

Don't outsource your thinking to LLMs. They can't think like you. They can't feel like you. They can't dream like you. Even though they can write like you.